In a world where robots are still learning to understand and mimic human behavior, a groundbreaking project named EgoMimic stands out. EgoMimic is no ordinary tool; it’s an entire framework that captures, aligns, and scales human actions as seen from a first-person view — essentially giving robots a new vantage point on human dexterity and nuance. This isn’t about simple gestures or basic tasks but about refining how robots learn from the rich, often unnoticed intricacies of human hand movements.

By capturing human actions through a pair of advanced “smart glasses” (Meta’s Project Aria), EgoMimic scales up imitation learning beyond what robot-only data collection allows. Rather than relying solely on large datasets of robot motions, the framework lets robots learn directly from human experience, merging the two worlds. The result? A system where an additional hour of human demonstration can be worth more than an additional hour of robot-only data. EgoMimic aims not just for robotic mimicry but for true cross-embodiment learning, with the goal of making robots as flexible and adaptable as we are in unpredictable environments.

Training with Human Embodiment Data

With EgoMimic, human data is not merely supplementary; it’s central. Traditional imitation learning relies on tedious robot data collection, typically through teleoperation, but EgoMimic flips this approach. Using egocentric data, it lets robots “see” the world as we do, from the first-person perspective of the person performing the task. The Project Aria glasses record the wearer’s view and track the 3D motion of their hands, turning that motion into a shared language robots can interpret.

Why does this matter? Because hands are incredibly precise. Whether it’s folding a shirt or placing items in a bag, our hands perform these tasks with an intuitive understanding of space, force, and sequence. EgoMimic leverages this knowledge, aligning these human data sources with robotic sensors, so robots don’t just replicate the action but “understand” it in the context of a unified, scalable policy. This level of alignment could lead to an era where robots no longer struggle with subtle variations in task performance, setting the foundation for truly versatile machines.

Merging Human and Robotic Data

A core challenge for EgoMimic is translating human data into a robotic language without losing its essence. Robots move differently from humans: they are rigid and predictable, whereas we are fluid and adaptive. EgoMimic tackles this by harmonizing human actions with robot actions through meticulous calibration and normalization. It expresses human hand trajectories in a stable, camera-centered reference frame that robots can interpret consistently.
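The two steps in the paragraph above, re-expressing trajectories in the camera frame and normalizing actions, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not EgoMimic’s code: it assumes the glasses provide each frame’s camera pose (rotation `R_wc`, translation `t_wc`) and world-frame hand positions, and that “normalization” means a per-dimension z-score computed separately for each embodiment. All function names are hypothetical.

```python
from statistics import mean, pstdev

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def world_to_camera(points_world: list[Vec3], R_wc: Mat3, t_wc: Vec3) -> list[Vec3]:
    """Re-express world-frame points in the camera frame.

    (R_wc, t_wc) is the camera pose in the world (camera -> world), so
    p_world = R_wc @ p_cam + t_wc, hence p_cam = R_wc^T @ (p_world - t_wc).
    """
    result = []
    for p in points_world:
        d = [p[i] - t_wc[i] for i in range(3)]
        # Multiplying by R^T: output i is column i of R dotted with d.
        result.append(tuple(sum(R_wc[j][i] * d[j] for j in range(3)) for i in range(3)))
    return result


def fit_normalizer(actions: list[list[float]]) -> tuple[list[float], list[float]]:
    """Per-dimension mean and std; a zero std is replaced by 1.0 to avoid dividing by zero."""
    dims = range(len(actions[0]))
    mu = [mean([a[d] for a in actions]) for d in dims]
    sigma = [pstdev([a[d] for a in actions]) or 1.0 for d in dims]
    return mu, sigma


def normalize(actions: list[list[float]], mu: list[float], sigma: list[float]) -> list[list[float]]:
    """Z-score each action vector with the statistics of its own embodiment."""
    return [[(a[d] - mu[d]) / sigma[d] for d in range(len(a))] for a in actions]


# Hypothetical usage: fit one normalizer per embodiment, not a shared one.
# human_mu, human_sigma = fit_normalizer(human_actions)
# robot_mu, robot_sigma = fit_normalizer(robot_actions)
```

Expressing every trajectory relative to the current camera removes the wearer’s head motion from the targets, and fitting separate statistics per embodiment keeps human and robot actions on a comparable scale even when their raw ranges differ.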

By masking out the appearance of hands and robot arms and applying alignment techniques, EgoMimic minimizes the visual differences between the two. This brings the robot’s view of the world as close as possible to how humans see and interact with it. It also ensures that the human operator’s hand and the robot’s “hand” follow comparable trajectories in 3D space, even though their forms differ. This bridge lets a single policy learn from both sources, making robots more adept at tasks that demand precision and finesse.
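The masking idea in the paragraph above reduces to a simple image operation once a segmentation mask exists. The sketch below assumes a binary mask for the hand or robot arm is already available from some segmentation model (not shown, and not necessarily the one EgoMimic uses) and that masked pixels are filled with a solid color; `mask_embodiment` is a hypothetical name.

```python
Pixel = tuple[int, int, int]


def mask_embodiment(image: list[list[Pixel]], mask: list[list[bool]],
                    fill: Pixel = (0, 0, 0)) -> list[list[Pixel]]:
    """Return a copy of `image` with every pixel where `mask` is True set to `fill`.

    Running the same function on human frames (hand mask) and robot frames
    (arm mask) removes the most obvious visual difference between the two.
    """
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same shape")
    return [
        [fill if covered else pixel for pixel, covered in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]
```

The key design choice is that both embodiments go through the identical rule, so the policy cannot use skin versus metal as a shortcut for telling the two data sources apart.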

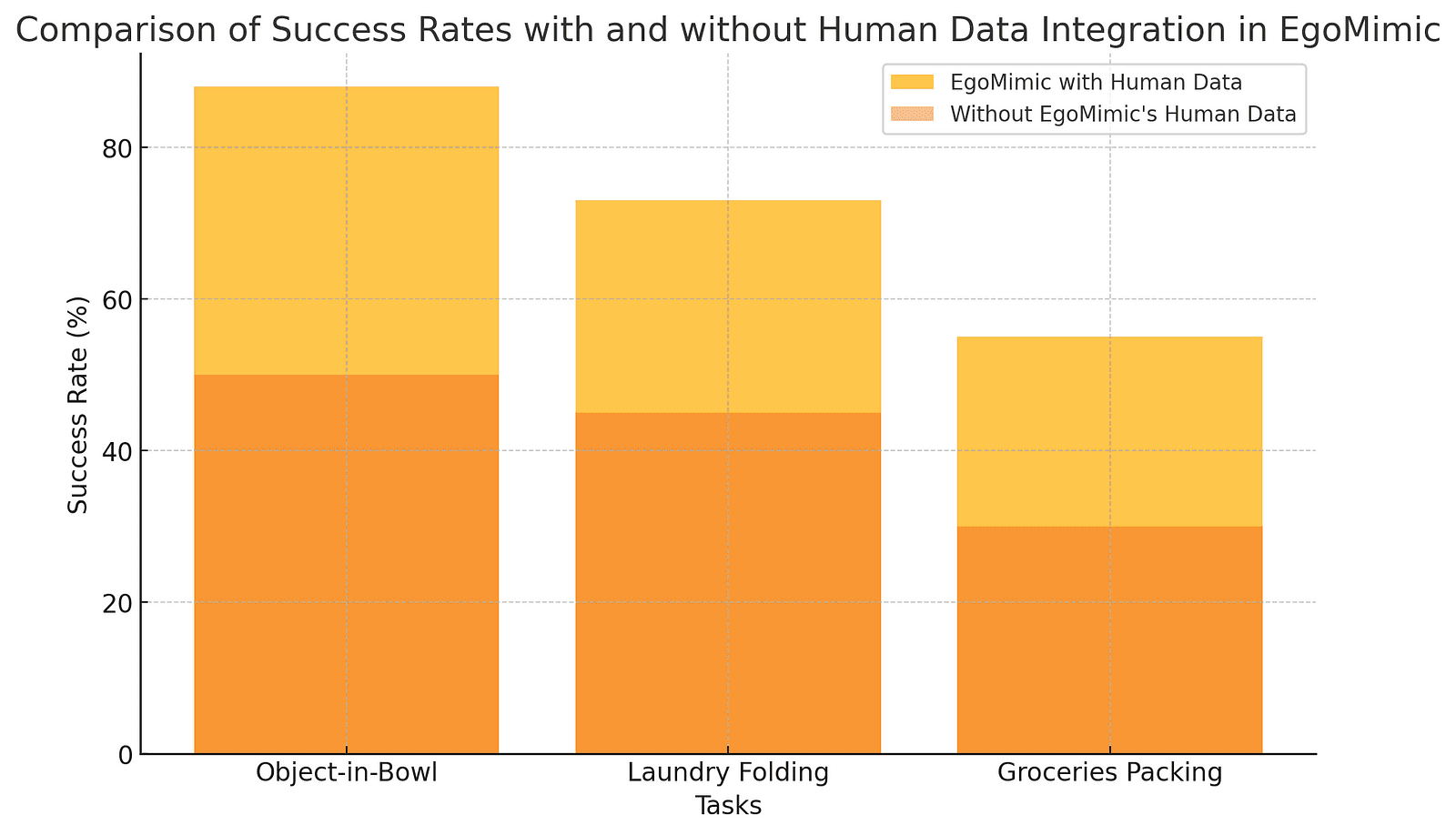

To illustrate the impact of human data integration, the graph above shows the success-rate improvements across various tasks when using EgoMimic’s egocentric learning framework.

Uniting Human and Robot Learning

EgoMimic doesn’t stop at imitation; it’s built for scalability. One hour of human data collection can yield as many as 1,400 demonstrations, compared with just over 100 in the same time on a robot. That is more than ten times as much data per hour, which makes EgoMimic well suited to environments where diversity and adaptability are critical. Even more compelling, EgoMimic generalizes: when trained on varied human demonstrations, the framework showed robust performance on entirely new objects and unfamiliar scenarios.

Imagine the potential. Robots learning from vast amounts of human data could perform everything from surgical assistance to intricate assembly tasks without retraining. In short, EgoMimic suggests a future where robots evolve by drawing from a rich, ever-expanding pool of human experience, bridging the gap between limited, pre-defined actions and flexible, real-world adaptability.

What Makes EgoMimic Unique

Turning Human Data into Robotic Intelligence

EgoMimic’s data-alignment system brings human hand data and robot manipulator data into a common form, creating a seamless learning pipeline that enhances robotic versatility.

Egocentric Perspective: Seeing Through Human Eyes

Project Aria’s egocentric view offers a first-person, task-focused perspective that captures human interaction at its most detailed, transforming this rich view into actionable robotic data.

Low-Cost Scalability for Widespread Use

By designing for compatibility with low-cost equipment, EgoMimic makes advanced imitation learning accessible and scalable, paving the way for broader deployment across industries.

Cross-Embodiment Learning: Shared Knowledge, Seamless Execution

This unified approach treats both human and robotic data as primary learning sources, eliminating the need for separate modules and allowing for continuous, integrated policy learning.
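One way to picture “both human and robotic data as primary learning sources” is co-training on mixed batches. The sketch below is a simplification under stated assumptions: each batch mixes human and robot samples at a chosen ratio, both embodiments supervise a shared hand/end-effector trajectory target, and only robot samples supervise an extra joint-action target. The `Sample` class, the ratio, and the stand-in predictor functions are hypothetical; a real policy would be a neural network.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Sample:
    embodiment: str                              # "human" or "robot"
    ee_trajectory: list[float]                   # hand / end-effector trajectory target
    joint_actions: Optional[list[float]] = None  # present only for robot samples


def mixed_batch(human: list[Sample], robot: list[Sample], batch_size: int,
                human_fraction: float, rng: random.Random) -> list[Sample]:
    """Draw one shuffled batch containing both embodiments in a fixed ratio."""
    if not 0.0 <= human_fraction <= 1.0:
        raise ValueError("human_fraction must be in [0, 1]")
    n_human = round(batch_size * human_fraction)
    batch = rng.choices(human, k=n_human) + rng.choices(robot, k=batch_size - n_human)
    rng.shuffle(batch)
    return batch


def mse(pred: list[float], target: list[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def cotrain_loss(batch: list[Sample],
                 predict_traj: Callable[[Sample], list[float]],
                 predict_joints: Callable[[Sample], list[float]]) -> float:
    """Average loss over a mixed batch.

    Every sample supervises the shared trajectory output; only samples that carry
    joint targets (robot data) also supervise the joint-action output.
    """
    total = 0.0
    for s in batch:
        total += mse(predict_traj(s), s.ee_trajectory)
        if s.joint_actions is not None:
            total += mse(predict_joints(s), s.joint_actions)
    return total / len(batch)
```

Because human samples simply skip the robot-only term, no separate human module is needed: the shared output learns from both sources in every gradient step.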

Efficiency Beyond Robot-Only Learning

Studies with EgoMimic show that even a single hour of human data can boost performance by over 100%, reducing the need for labor-intensive, robot-specific data collection.
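A performance boost of “over 100%” is a relative improvement, and it helps to spell out what that means. Writing $s_{\text{base}}$ for the success rate without the extra human data and $s_{\text{new}}$ for the rate with it (our notation, not the study’s), and assuming $s_{\text{base}} > 0$:

```latex
\Delta_{\text{rel}} = \frac{s_{\text{new}} - s_{\text{base}}}{s_{\text{base}}} > 1
\quad\Longleftrightarrow\quad
s_{\text{new}} > 2\, s_{\text{base}}
```

In other words, the success rate more than doubles: a hypothetical baseline of 20% would have to rise above 40%.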

Towards a Future of Limitless Robot Potential

EgoMimic is more than a framework; it’s a paradigm shift in how we teach machines to think, act, and adapt. By merging human embodiment data with robotic capability, it opens doors to robots that aren’t just functional but truly capable of working alongside us in dynamic, real-world environments. EgoMimic is, in essence, a bridge to a future where robots learn as flexibly as humans, scaling to meet the complexities of a world in constant flux. The promise it holds is nothing short of revolutionary.

About Disruptive Concepts

Welcome to @Disruptive Concepts — your crystal ball into the future of technology. 🚀 Subscribe for new insight videos every Saturday!

See us on https://twitter.com/DisruptConcept

Read us on https://medium.com/@disruptiveconcepts

Enjoy us at https://disruptive-concepts.com

Whitepapers for you at: https://disruptiveconcepts.gumroad.com/l/emjml