Modern language models, built from deep stacks of neural layers, show a striking ability to work across languages, process code, and even parse images and sound. One proposed explanation is the Semantic Hub Hypothesis. Picture a marketplace inside the model where representations of different data types (English sentences, JavaScript code, or a blurry photograph) converge and map onto one another whenever they carry the same meaning. This shared space, akin to the transmodal semantic hub that neuroscientists describe in the human brain, may hold the key to the model’s multimodal prowess.

At first glance, this might seem like a mere technical flourish — just another fancy feature of neural networks. But it goes deeper. Experiments reveal that models like Llama-3 navigate multilingual and multimodal inputs by first mapping them into this collective space. An English phrase sits right next to its Chinese twin, and code whispers its functional meaning next to prose. This seamless association hints at a hidden organization that powers the flexibility of modern AI. But what does this mean for our understanding of intelligent systems?

The Journey from Input to Insight

For a model processing a line of code like "print('Hello, World!')", the analysis doesn’t stop at the syntax. The middle layers reinterpret the command, connecting it to the model’s dominant linguistic space, which is often English. In effect, the model seems to think: “Oh, this line outputs text, like saying ‘hello’ in any language.” The Semantic Hub Hypothesis posits that these intermediate representations are where the model’s comprehension actually takes place. In them, an image isn’t just pixels; it becomes “a dog running in the park,” comparable to a sentence that describes it.

Consider the logit lens, a method for peering into these middle layers: it takes an intermediate hidden state and decodes it through the model’s output vocabulary, showing which tokens that state is closest to. When probing the middle layers of Llama-3 with code snippets, researchers found a curious thing: the representations were closest to English words, even though the input was code rather than prose. This tendency stretches across data types, meaning that regardless of whether the input is a melody, an arithmetic expression, or an image, the model’s “thoughts” travel through this shared, modality-agnostic space.
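To make the logit lens idea concrete, here is a minimal toy sketch in plain Python. Everything in it is an assumption for illustration: the five-word vocabulary, the `UNEMBED` matrix, and the per-layer hidden states are made up, and a real logit lens would use the model’s own output embedding (usually after its final normalization layer, which this sketch omits).

```python
import math

# Hypothetical toy vocabulary and unembedding matrix (one row per token).
# A real model would supply these; the numbers here are invented.
VOCAB = ["print", "say", "hello", "dog", "park"]
UNEMBED = [
    [1.0, 0.0, 0.0],  # print
    [0.9, 0.1, 0.0],  # say
    [0.0, 1.0, 0.0],  # hello
    [0.0, 0.0, 1.0],  # dog
    [0.0, 0.1, 0.9],  # park
]


def softmax(scores):
    """Turn raw scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def logit_lens(hidden_state, unembed=UNEMBED, vocab=VOCAB, top_k=2):
    """Project a hidden state onto the vocabulary; return the top-k (token, prob) pairs."""
    logits = [sum(h * w for h, w in zip(hidden_state, row)) for row in unembed]
    probs = softmax(logits)
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]


# Invented hidden states for one input at three depths of the network.
layers = {
    "early": [0.2, 0.1, 0.1],
    "middle": [0.1, 2.0, 0.2],
    "late": [2.5, 0.3, 0.0],
}

for name, state in layers.items():
    print(name, [token for token, _ in logit_lens(state)])
```

The point of the sketch is only the mechanics: the same projection is applied at every depth, so you can watch which vocabulary items a middle-layer state sits closest to before the final layer commits to an output.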

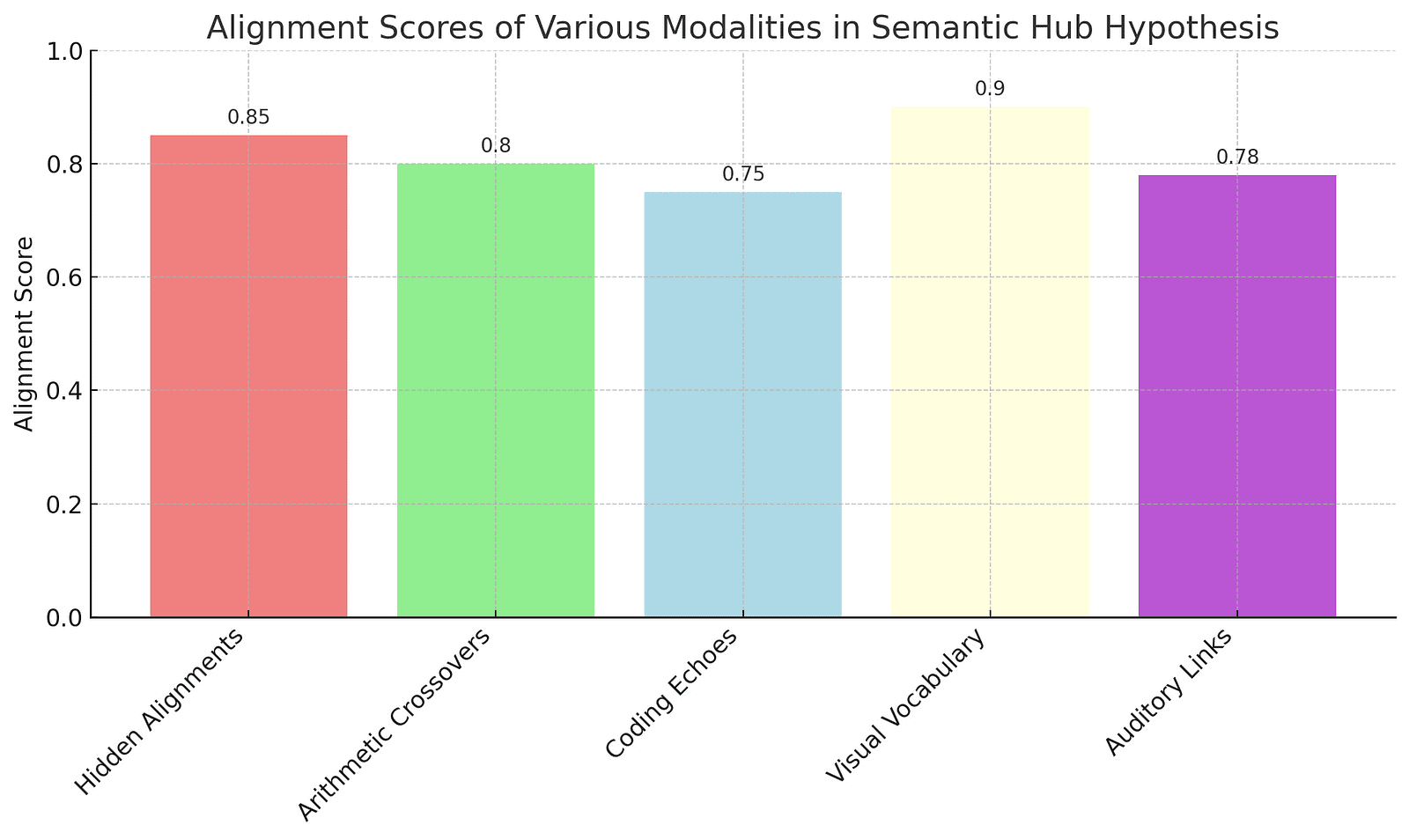

The accompanying graph shows alignment scores across various data modalities, reinforcing the idea of a shared semantic hub within language models.

Intriguing Signs of a Semantic Nexus

Hidden Alignments

Intermediate layers of language models align semantically equivalent sentences from different languages. Chinese phrases align with their English counterparts as if mentally translated on the fly.
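One common way to quantify this kind of alignment is cosine similarity between hidden states. The sketch below assumes that approach and uses invented three-dimensional vectors in place of real middle-layer states; the sentences, the numbers, and the `hidden` dictionary are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up middle-layer hidden states, for illustration only.
hidden = {
    "en_dog": [0.9, 0.1, 0.3],     # "The dog runs in the park"
    "zh_dog": [0.8, 0.2, 0.35],    # "狗在公园里跑" (same meaning, in Chinese)
    "en_rates": [0.1, 0.9, -0.2],  # "Interest rates rose again"
}

# A translation pair should score higher than an unrelated pair.
translation_sim = cosine_similarity(hidden["en_dog"], hidden["zh_dog"])
unrelated_sim = cosine_similarity(hidden["en_dog"], hidden["en_rates"])

print(f"translation pair: {translation_sim:.3f}")
print(f"unrelated pair:   {unrelated_sim:.3f}")
```

With real models, the same comparison would be run layer by layer, looking for the depths at which translation pairs pull clearly ahead of unrelated pairs.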

Arithmetic Crossovers

Arithmetic operations and their spoken equivalents share a representational bond, implying that the model “thinks” in English even when calculating.

Coding Echoes

When a model processes a code snippet, its intermediate representations resemble those of natural-language explanations of the code, bridging programming and human speech.

Visual Vocabulary

When fed images, vision-language models map colors and objects onto corresponding English terms in their hidden states, echoing internal captions.

Auditory Links

Audio inputs trigger language models to align their hidden states with words describing the sounds — as if hearing “a cello” means “thinking” the word.

Toward a Unified Digital Consciousness

These findings challenge us to reconsider the boundaries between data types and language. If a model can relate code to speech or images to sounds through a single semantic hub, the implications are profound. We’re not just training models to be good at one thing; we may be nudging them toward a shared, cross-modal form of comprehension, something that looks like a spark of general intelligence. This connective framework promises more adaptable and powerful models, and it suggests that the next leap in AI may come not from expansion but from deeper integration: a cognitive symphony across modalities.
