AI is now painting light itself — how neural rendering reshapes reality.

The future of digital realism isn’t just about more detailed textures or higher resolutions; it’s about mastering the unseen behavior of light. How light interacts with objects has long been a puzzle for artists and engineers alike. Traditional rendering methods rely on meticulous physics-based calculations, but they falter in real-world scenarios where the required scene data cannot be captured perfectly. Enter DIFFUSIONRENDERER, a neural system that uses AI-powered video diffusion models to perform both inverse and forward rendering. It learns from data what conventional pipelines must compute from explicit physics. This could redefine image synthesis, from game design and CGI to real-world augmented reality applications. But how does it work, and what makes it so revolutionary?

The Rise of Neural Rendering: Beyond Pixels and Polygons

Neural rendering is more than just another step in graphics processing; it’s a paradigm shift. Traditional rendering methods, such as physically based rendering (PBR), simulate how light interacts with 3D geometry. This approach, while effective, demands explicit knowledge of scene parameters — everything from object geometry to material properties and light sources. Neural rendering, by contrast, learns patterns from vast datasets, allowing it to approximate realistic light transport even when key scene data is missing.

DIFFUSIONRENDERER takes this a step further by employing video diffusion models that first decompose the input footage into essential per-pixel scene attributes, such as surface normals, material roughness, and metallic properties. It then uses those attributes to reconstruct a photorealistic output. Because it works from these estimated attributes rather than from precisely built 3D models, the approach makes AI-powered rendering practical for real-world applications where obtaining exact scene data is often infeasible.
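In graphics pipelines, per-pixel attributes like these are conventionally stored in a G-buffer (geometry buffer). The sketch below is a minimal illustration that assumes a simple per-pixel layout. The class name, the field names, and the extra `base_color` field are hypothetical choices for this example and are not DIFFUSIONRENDERER's actual data format.

```python
import math
from dataclasses import dataclass


@dataclass
class GBufferPixel:
    """Hypothetical per-pixel scene attributes of the kind an inverse renderer estimates."""
    base_color: tuple[float, float, float]  # linear RGB albedo in [0, 1] (assumed field)
    normal: tuple[float, float, float]      # unit surface normal (coordinate frame assumed)
    roughness: float                        # 0 = mirror-smooth, 1 = fully rough
    metallic: float                         # 0 = dielectric, 1 = metal

    def validate(self) -> None:
        """Raise ValueError if any attribute falls outside its expected range."""
        if not all(0.0 <= c <= 1.0 for c in self.base_color):
            raise ValueError("base_color channels must lie in [0, 1]")
        length = math.sqrt(sum(c * c for c in self.normal))
        if abs(length - 1.0) > 1e-3:
            raise ValueError(f"normal must be unit length, got {length:.4f}")
        for name in ("roughness", "metallic"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must lie in [0, 1]")


# One frame is a height x width grid of pixels; a video is then a list of frames.
GBufferFrame = list[list[GBufferPixel]]

# Example: a rough, non-metallic red surface facing straight along +z.
pixel = GBufferPixel((0.8, 0.1, 0.1), (0.0, 0.0, 1.0), 0.4, 0.0)
pixel.validate()
```

Explicit range checks like these matter because estimated attributes, unlike hand-authored ones, can drift out of physically meaningful bounds.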

Forward and Inverse Rendering: AI’s Double Vision

Rendering can be divided into two broad tasks. Forward rendering generates realistic images from given scene information, and inverse rendering extracts that information from existing images or videos. DIFFUSIONRENDERER handles both tasks, building on pre-trained video diffusion models.

With inverse rendering, the model estimates intrinsic scene properties such as geometry, materials, and lighting conditions. This is a game-changer for image editing, enabling applications such as relighting and object insertion without manual scene reconstruction. Once scene attributes are estimated, forward rendering can take those parameters and produce a photorealistic image under new lighting conditions. Traditional rendering requires precise physics calculations, whereas this method learns to synthesize realistic images directly from training data.
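DIFFUSIONRENDERER learns inverse rendering from data. For contrast, consider a classical, physics-based way to invert a much simpler image model: photometric stereo. This is a swapped-in textbook technique, not the paper's method. It assumes a Lambertian (perfectly matte) surface lit by three known, non-coplanar light directions, and it recovers albedo and surface normal per pixel by solving a 3x3 linear system. The function name is illustrative. The example shows how much explicit scene knowledge (known lights, idealized materials) a purely physical inversion needs.

```python
import math

Vec3 = tuple[float, float, float]


def det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def photometric_stereo(lights: list[Vec3], intensities: list[float]) -> tuple[float, Vec3]:
    """Recover (albedo, unit normal) for one Lambertian pixel under three known lights.

    Model: I_k = albedo * dot(l_k, n). Solving L @ g = I gives g = albedo * n.
    """
    L = [list(light) for light in lights]
    d = det3(L)
    if abs(d) < 1e-9:
        raise ValueError("light directions must not be coplanar")
    g = []
    for col in range(3):
        # Cramer's rule: replace column `col` of L with the measured intensities.
        m = [row[:] for row in L]
        for r in range(3):
            m[r][col] = intensities[r]
        g.append(det3(m) / d)
    albedo = math.sqrt(sum(c * c for c in g))
    normal = (g[0] / albedo, g[1] / albedo, g[2] / albedo)
    return albedo, normal


# Synthesize measurements from a known surface, then invert them.
true_albedo, true_normal = 0.7, (0.0, 0.6, 0.8)
lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]
intensities = [true_albedo * sum(a * b for a, b in zip(l, true_normal)) for l in lights]
albedo, normal = photometric_stereo(lights, intensities)
```

This inversion breaks down on shiny materials, unknown lights, or shadows, which are exactly the conditions real footage presents.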

One of the most powerful applications of this duality is relighting, where an image or video is altered to simulate different lighting conditions without requiring new scene captures. Imagine a filmmaker turning a sunset shot into midday lighting in post-production, or a game developer adjusting in-game illumination without pre-baking light maps.
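A deliberately simplified sketch makes the relighting idea concrete. One pixel's estimated attributes are held fixed, and only the lighting changes between a low, warm sunset sun and a high, white midday sun. The shading uses a hand-written Lambertian (diffuse-only) formula purely for illustration. DIFFUSIONRENDERER's forward renderer is a learned video diffusion model, not this formula. The function names and the z-up convention are assumptions.

```python
import math


def sun_direction(elevation_deg: float, azimuth_deg: float = 0.0) -> tuple[float, float, float]:
    """Unit vector pointing toward the sun, with z as 'up' (assumed convention)."""
    el, az = math.radians(elevation_deg), math.radians(azimuth_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def lambert_shade(albedo, normal, light_dir, light_color):
    """Diffuse-only shading per RGB channel: albedo * light_color * max(0, n . l)."""
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(a * c * n_dot_l for a, c in zip(albedo, light_color))


# The estimated attributes for an upward-facing surface stay fixed...
albedo, normal = (0.6, 0.5, 0.4), (0.0, 0.0, 1.0)

# ...and only the lighting input changes.
sunset = lambert_shade(albedo, normal, sun_direction(5.0), (1.0, 0.6, 0.3))
midday = lambert_shade(albedo, normal, sun_direction(70.0), (1.0, 1.0, 1.0))
```

The sunset result is both dimmer and redder than the midday one. No new capture is needed, only a different lighting input applied to the same attributes.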

The Power of Data: Training AI to See the World

The accuracy of neural rendering depends largely on the quality of training data. DIFFUSIONRENDERER’s training pipeline is an intricate mix of synthetic and real-world datasets. Using curated 3D models, environments, and physically based rendering techniques, millions of training samples are generated to teach the AI how light behaves. To bridge the gap between synthetic perfection and real-world imperfection, DIFFUSIONRENDERER automatically labels real-world video datasets, extracting scene properties using its inverse rendering model.
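The auto-labeling step can be pictured as a simple data-assembly routine. In this minimal sketch, synthetic samples keep their exact ground-truth attributes, and each real video is labeled by an inverse model. The `Sample` class, the `build_training_set` function, and the `inverse_model` callable are hypothetical stand-ins. They are not the paper's actual pipeline or API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Sample:
    frames: list            # input video frames (format left abstract here)
    labels: Optional[dict]  # scene attributes, e.g. {"normal": ..., "roughness": ...}
    source: str             # "synthetic" or "real"


def build_training_set(
    synthetic: list[Sample],
    real_videos: list[list],
    inverse_model: Callable[[list], dict],
) -> list[Sample]:
    """Merge exact synthetic labels with automatically generated labels for real videos.

    `inverse_model` is a hypothetical stand-in for a trained inverse renderer
    that maps a video to estimated scene attributes.
    """
    dataset = list(synthetic)  # synthetic renders come with exact ground-truth attributes
    for video in real_videos:
        # Real footage has no ground truth, so the inverse model supplies the labels.
        dataset.append(Sample(frames=video, labels=inverse_model(video), source="real"))
    return dataset
```

A natural design choice, assumed here, is to tag each sample's `source` so that later training code can treat exact and estimated labels differently.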

This combination helps the system generalize across different environments, whether it’s reconstructing a photorealistic car interior or estimating the lighting in an outdoor scene. The result is an AI that doesn’t just generate images but also models how geometry, materials, and light combine to produce them.

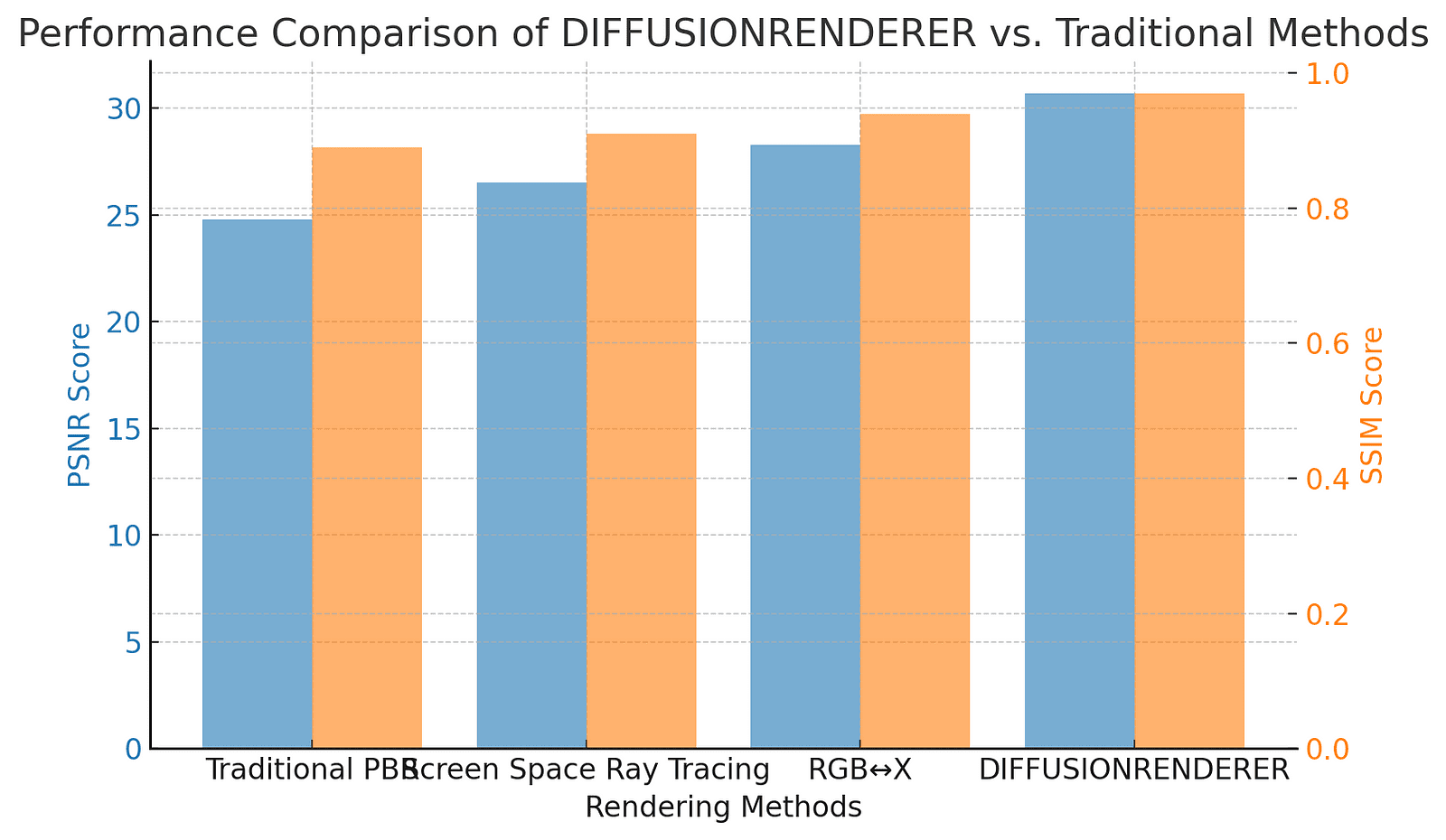

Performance Comparison of DIFFUSIONRENDERER vs. Traditional Methods

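A key measure of success in rendering is how closely the AI-generated results match ground-truth images. DIFFUSIONRENDERER is compared against other rendering methods using two metrics. PSNR (Peak Signal-to-Noise Ratio) scores per-pixel error on a logarithmic decibel scale. SSIM (Structural Similarity Index) compares brightness, contrast, and structure. Higher values of both indicate a closer match to the ground truth.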
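Both metrics are straightforward to compute. The sketch below is a minimal pure-Python version over flattened grayscale pixel values in [0, 1]. Note that `global_ssim` is a simplification. Standard SSIM, as implemented in common image libraries, is computed over local sliding windows and then averaged, so its numbers will differ from this whole-image version.

```python
import math


def psnr(reference: list[float], test: list[float], max_value: float = 1.0) -> float:
    """Peak Signal-to-Noise Ratio in dB: 10 * log10(MAX^2 / MSE). Higher is better."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(reference: list[float], test: list[float], max_value: float = 1.0) -> float:
    """SSIM evaluated once over the whole image (simplified: no local windows)."""
    n = len(reference)
    mu_x = sum(reference) / n
    mu_y = sum(test) / n
    var_x = sum((x - mu_x) ** 2 for x in reference) / n
    var_y = sum((y - mu_y) ** 2 for y in test) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(reference, test)) / n
    c1 = (0.01 * max_value) ** 2  # stabilizing constants from the standard SSIM definition
    c2 = (0.03 * max_value) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


ground_truth = [0.1, 0.4, 0.8, 0.6]
rendered = [0.12, 0.38, 0.79, 0.63]
```

The two metrics answer different questions. PSNR penalizes raw pixel error, while SSIM rewards preserved structure. For that reason, rendering comparisons usually report both.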

How AI Masters Light and Shadow

Light is the defining factor in realism, and neural rendering offers new ways to manipulate it. DIFFUSIONRENDERER can estimate the direction, intensity, and interaction of light within a scene, making it a powerful tool for relighting and for enhancing visual accuracy in CGI and gaming.
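A common way to represent scene lighting in graphics is an environment map, a 360° image of incoming light. The sketch below uses a classical heuristic that is not DIFFUSIONRENDERER's method. It reduces an equirectangular luminance map to a single dominant light direction and an integrated brightness by weighting each pixel's direction by its luminance and solid angle. The function name, the grayscale input, and the axis conventions are assumptions.

```python
import math


def dominant_light(env: list[list[float]]) -> tuple[tuple[float, float, float], float]:
    """Estimate a dominant light direction and integrated luminance from an
    equirectangular map (rows = polar angle measured from +z, cols = azimuth)."""
    h, w = len(env), len(env[0])
    d_theta, d_phi = math.pi / h, 2.0 * math.pi / w
    sx = sy = sz = total = 0.0
    for i, row in enumerate(env):
        theta = (i + 0.5) * d_theta
        # Pixels near the poles cover less of the sphere, so weight by solid angle.
        solid_angle = math.sin(theta) * d_theta * d_phi
        for j, lum in enumerate(row):
            phi = (j + 0.5) * d_phi
            weight = lum * solid_angle
            sx += weight * math.sin(theta) * math.cos(phi)
            sy += weight * math.sin(theta) * math.sin(phi)
            sz += weight * math.cos(theta)
            total += weight
    norm = math.sqrt(sx * sx + sy * sy + sz * sz)
    if norm <= 1e-9 * total:
        raise ValueError("no clear dominant direction (e.g. empty or uniform map)")
    return (sx / norm, sy / norm, sz / norm), total


# A dark sky with one bright pixel near the zenith.
env = [[0.0] * 8 for _ in range(4)]
env[0][0] = 10.0
direction, total = dominant_light(env)
```

A single direction discards soft fill light and multiple sources. This loss of detail is one reason richer, learned lighting representations are attractive.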

The Art of Reverse Engineering Reality

Inverse rendering allows AI to extract the fundamental properties of an image — such as geometry and texture — from a simple video. By decoding these attributes, it can reconstruct the original lighting environment, making it possible to place virtual objects seamlessly in real-world footage.
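One way to picture object insertion: once footage has been decomposed into per-pixel attributes, a virtual object's attributes can be merged in with an ordinary depth test before re-rendering. The following minimal sketch assumes buffers stored as dicts of equally sized 2D lists, with hypothetical keys such as `depth` and `albedo`. DIFFUSIONRENDERER's actual editing workflow may differ. This step only merges attributes. Producing the object's shadows and reflections would be the job of the subsequent rendering step.

```python
import math


def insert_object(scene: dict, obj: dict) -> dict:
    """Composite an object's attribute buffers into a scene's with a per-pixel depth test.

    Both inputs map names (e.g. "depth", "albedo") to equally sized 2D lists.
    `depth` is camera distance (smaller = closer); the object uses math.inf where absent.
    """
    h, w = len(scene["depth"]), len(scene["depth"][0])
    out = {k: [row[:] for row in v] for k, v in scene.items()}  # copy; don't mutate input
    for y in range(h):
        for x in range(w):
            if obj["depth"][y][x] < scene["depth"][y][x]:  # object is in front here
                for k in out:
                    out[k][y][x] = obj[k][y][x]
    return out


scene = {"depth": [[5.0, 5.0]], "albedo": [[0.2, 0.2]]}
obj = {"depth": [[2.0, math.inf]], "albedo": [[0.9, 0.0]]}
merged = insert_object(scene, obj)
```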

Machines Learning to See: The Role of Data

The foundation of any intelligent system is data. DIFFUSIONRENDERER combines synthetic and real-world datasets to develop a model that understands light interactions across diverse materials and settings. This enables the AI to produce realistic renderings even when faced with imperfect input data.

The Hidden Power of Video Diffusion Models

Unlike standard image-based models, video diffusion models process entire sequences at once, which helps keep results consistent across frames. This means smoother, more believable results in applications such as film production, where maintaining continuity is critical.
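The idea of processing entire sequences can be grounded in a standard (DDPM-style) diffusion model. During training, clean data is corrupted with Gaussian noise according to a schedule, and the network learns to reverse the corruption. For video, the data is the whole clip, so every frame is noised, and later denoised, as one sample. The sketch shows only the forward noising step. It uses the linear beta schedule from the original DDPM setup as an assumed choice. This is generic diffusion math, not DIFFUSIONRENDERER's specific configuration.

```python
import math
import random


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_clip(clip: list[list[float]], alpha_bar: float, rng: random.Random) -> list[list[float]]:
    """Forward diffusion q(x_t | x_0) applied to a whole clip (frames x pixels) at once:
    x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps, with eps ~ N(0, 1) per value.
    A video model then denoises all frames of x_t jointly rather than one at a time."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[a * x + b * rng.gauss(0.0, 1.0) for x in frame] for frame in clip]


clip = [[0.5] * 4 for _ in range(3)]  # 3 frames x 4 pixels, flattened grayscale
alpha_bars = linear_alpha_bars(1000)
noisy = noise_clip(clip, alpha_bars[500], random.Random(0))
```

A per-frame image model would denoise each frame independently and could drift between frames. Treating the clip as one sample gives the network the context it needs to keep frames consistent.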

A New Era for Digital Content Creation

The implications of AI-driven rendering extend far beyond gaming and film. Architectural visualization, fashion design, and even online shopping could benefit from the ability to preview objects in dynamic lighting conditions. The ability to instantly alter textures, materials, and light sources marks the beginning of a new creative revolution.

Unlocking the Future: Applications and Beyond

The ability to manipulate lighting and materials at will has vast implications across multiple industries. In film and gaming, directors can relight scenes in post-production, while game developers can create more immersive experiences with dynamic lighting. Augmented and virtual reality applications can seamlessly integrate virtual objects into real-world environments with accurate lighting. In e-commerce and design, retailers can showcase products in different lighting conditions, allowing customers to visualize furniture or clothing in various settings before purchase. Researchers in scientific visualization can use AI-rendered models to better understand light interactions in different materials, aiding in fields like medical imaging and material science.

The Road Ahead: AI’s Role in the Visual Revolution

AI-powered rendering is still evolving, but DIFFUSIONRENDERER marks a crucial milestone in achieving photorealistic results without traditional constraints. As AI models become more sophisticated, we can expect even greater fidelity, real-time performance improvements, and broader accessibility.

This isn’t just about making images look better — it’s about changing how we create and interact with digital content. With neural rendering, the line between real and synthetic is blurring, opening doors to new creative possibilities that were previously unimaginable.

About Disruptive Concepts

Welcome to @Disruptive Concepts — your crystal ball into the future of technology. 🚀 Subscribe for new insight videos every Saturday!

See us on https://twitter.com/DisruptConcept

Read us on https://medium.com/@disruptiveconcepts

Enjoy us at https://disruptive-concepts.com

Whitepapers for you at: https://disruptiveconcepts.gumroad.com/l/emjml

New Apps: https://2025disruptive.netlify.app/