It’s one thing for a language model to learn, but what if we could make it forget just as convincingly? For example, what if an AI trained on sensitive medical procedures could be made to forget dangerous steps while retaining general medical knowledge? This idea of “concept erasure” is the heart of a new approach designed to make artificial intelligence (AI) models behave as if they never learned particular things, all while preserving their overall capabilities. Proposed by researchers studying how to remove knowledge from trained models, Erasure of Language Memory (ELM) is a method for making a model forget a targeted concept while leaving the rest of what it knows alone.

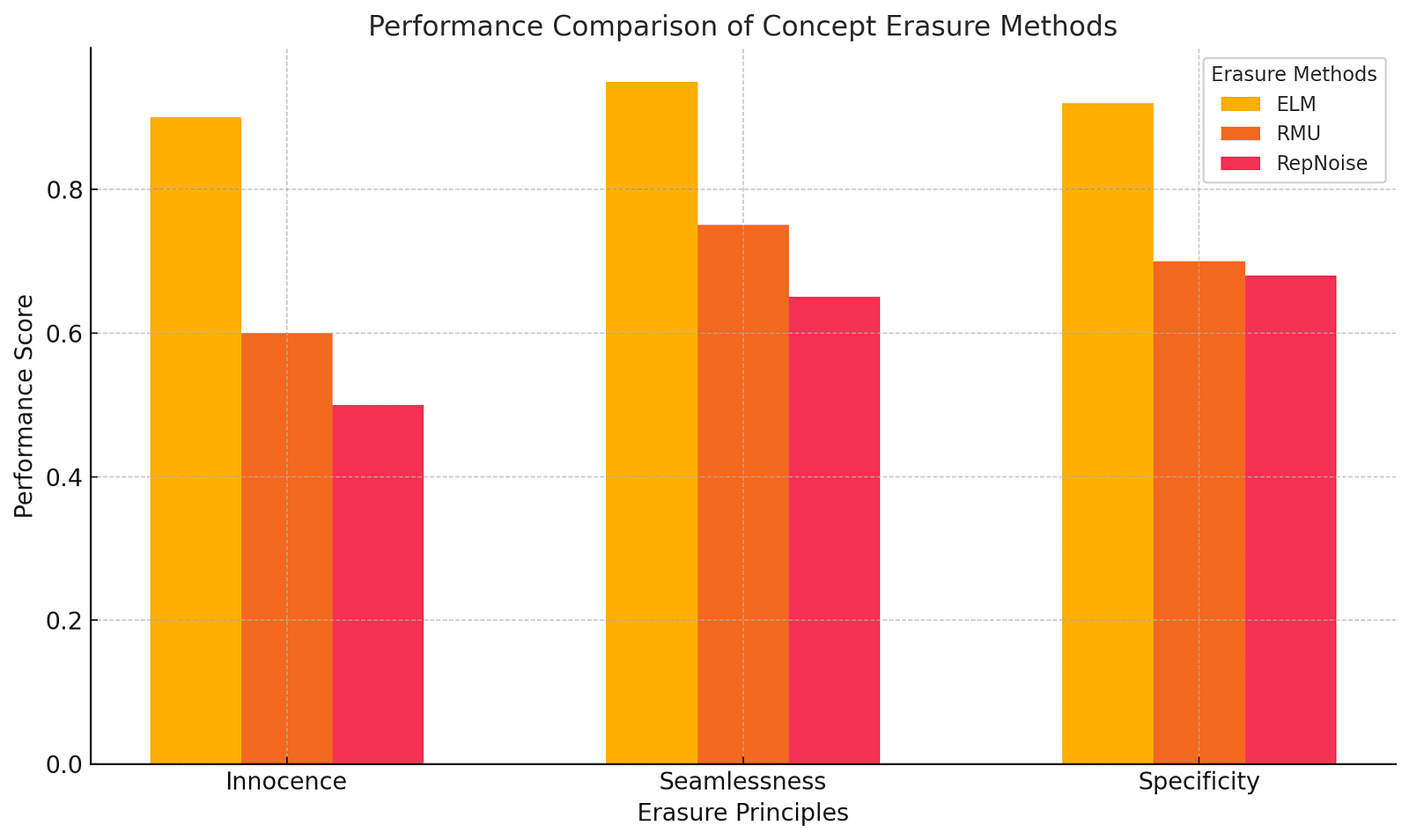

Concept erasure isn’t just about wiping the slate clean. Imagine an AI that understands the fundamentals of biology but is unable to reproduce the dangerous intricacies of, say, bioweapon creation. Erasing knowledge needs to be surgical — it requires innocence (complete erasure), seamlessness (maintaining normal behavior), and specificity (erasing just one thing without collateral damage). It’s like trying to wipe a word from a dictionary while leaving the entire language intact.

Three Erasure Principles: Innocence, Seamlessness, Specificity

The ELM approach aims to preserve what we love about AI while making it forget what we fear. Let’s break down the three principles: innocence, seamlessness, and specificity. Innocence means wiping out the undesirable concept completely: if you ask a model about something erased, it genuinely doesn’t know anymore. Seamlessness means the model remains fluent, producing coherent text even when prompted about erased content, rather than going blank. And finally, specificity ensures the erasure process doesn’t accidentally delete everything around it; it’s a bit like cutting one actor out of a film while the rest of the cast, and the story, carry on undisturbed.

Previous attempts at concept erasure faced issues: some reduced the unwanted knowledge but damaged overall quality, while others left remnants of dangerous ideas. ELM uses low-rank adaptation, a parameter-efficient fine-tuning technique that leaves the original weights frozen and trains only a small set of added parameters, to bring precision to forgetting, letting language models be forgetful in all the right ways.
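To make the low-rank idea concrete, here is a minimal sketch in plain Python. It is a generic illustration of low-rank adaptation, not ELM’s actual code: the matrix sizes, the helper names (`matmul`, `apply_lora`, `trainable_params`), and the random values are all assumptions chosen for the example. A frozen weight matrix `W` is adjusted by adding the product of two much smaller matrices, `B` and `A`, and only those two are trained.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def apply_lora(W, B, A, scale=1.0):
    """Return W + scale * (B @ A). W itself is not modified (it stays frozen)."""
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

def trainable_params(d_out, d_in, rank):
    """Count the values in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_out + d_in)

# Hypothetical sizes, chosen only for illustration.
d_out, d_in, rank = 8, 6, 2
rng = random.Random(0)

W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]    # frozen base weights
B = [[0.0] * rank for _ in range(d_out)]                              # starts at zero
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # small random values

# Because B starts at zero, the adapted layer initially matches the original.
W_adapted = apply_lora(W, B, A)

full_params = d_out * d_in                         # values in the full matrix
lora_params = trainable_params(d_out, d_in, rank)  # values actually trained
print(full_params, lora_params)
```

The savings grow with layer size. Under the same counting, a 4096 by 4096 layer has 16,777,216 weights, while a rank-8 update trains only 65,536 values. That small footprint is part of what makes a narrowly targeted change plausible.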

The graph above compares the performance of different concept erasure methods — ELM, RMU, and RepNoise — across three key principles: Innocence, Seamlessness, and Specificity.

Why Forgetting Is Hard for AI — and How ELM Does It Right

To achieve this precise erasure, ELM fine-tunes models in a targeted, nuanced way, ensuring they remain highly functional elsewhere. Imagine training a concert pianist to “unlearn” one particular song — without impairing any of their other pieces or making them sound less confident on stage. Similarly, ELM allows AI models to selectively forget specific concepts while ensuring their overall functionality and fluency remain intact. ELM effectively tweaks the internal “memory” of AI models by adjusting how likely they are to generate certain types of responses.
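As a toy picture of what “adjusting how likely” a response is can mean, the sketch below lowers the score of one targeted token and recomputes the next-token probabilities. This is not ELM’s training objective, which this article does not spell out; the vocabulary, the scores, and the `suppress` helper are all hypothetical.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def suppress(logits, targeted, penalty):
    """Lower the score of each targeted token by `penalty`; leave the rest alone."""
    return {tok: (score - penalty if tok in targeted else score)
            for tok, score in logits.items()}

# Hypothetical next-token scores for a three-word vocabulary.
logits = {"hazard_term": 2.0, "cell": 1.0, "protein": 0.5}

before = softmax(logits)
after = softmax(suppress(logits, targeted={"hazard_term"}, penalty=5.0))

# The targeted token becomes much less likely...
print(before["hazard_term"] > after["hazard_term"])
# ...while the untargeted tokens keep the same odds relative to each other.
print(math.isclose(before["cell"] / before["protein"],
                   after["cell"] / after["protein"]))
```

In this toy, the probability taken from the targeted token is shared among the others in proportion to what they already had, which is a loose analogy for specificity. A fine-tuning method changes the model’s weights rather than patching scores at generation time, so treat this only as intuition.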

For example, in erasing knowledge of cybersecurity attacks or bioweapons, ELM uses synthetic fine-tuning data, that is, data generated artificially so the model can adapt without being trained on sensitive real-world information. This data is designed to ensure the model maintains its ability to respond fluidly when confronted with an erased topic, without reproducing the dangerous content it once knew. The magic lies in this synthesis: a kind of faux memory, where the model’s response is polished and convincing, but devoid of actual knowledge of the erased concept.

Resistance to Adversarial Attacks

The real test of any AI erasure is when you try to break it. ELM’s strength lies not just in making models forget, but in making them resistant to someone trying to force a forgotten memory out again, a “jailbreak.” By relying on prompt conditioning, the ELM-modified models held up against adversarial prompting, keeping their “innocence” intact even when probed for erased knowledge by sophisticated attacks.

This robustness doesn’t come from a model that is merely hiding what it knows. Imagine someone who can say “I have no idea what you’re talking about” and sound convincing because it is true. In ELM, this is achieved through prompt conditioning and careful fine-tuning, ensuring the model generates fluent responses while genuinely lacking the erased knowledge. The result is a deliberate, engineered blankness that comes across as ordinary fluency.

The Erased, the Left Behind, and the New Norms

ELM’s journey into targeted forgetting opens up new pathways — not only in creating safer AI but in asking what else we could teach machines to unknow. However, this also raises significant ethical questions: Who decides what knowledge should be erased, and what criteria are used? The implications of selective memory in AI touch on power dynamics, the potential for misuse, and the long-term impact on how machines understand and interact with human values. Could AI “forget” harmful stereotypes or biases? Could we delete entire categories of misinformation? And if so, what responsibility do we take for what gets forgotten or remembered?

As we look ahead, the concept of erasure in AI isn’t just about what we can make these models do — but what we can make them undo. It’s about the dignity of discretion, the beauty of editing one’s memories, and the promise of AI that remembers just enough, while gracefully choosing to forget what we, collectively, decide it no longer needs.

When AI “Unlearns,” It Becomes More Trustworthy

Removing dangerous concepts not only makes AI models safer but also reduces the risk of misuse, creating systems that align better with ethical standards.

It’s Like Brain Surgery for AI

Concept erasure needs precision. Too broad, and you risk a lobotomy. Too narrow, and the dangerous knowledge lingers. ELM finds a way to be exact.

Forgetfulness Isn’t Ignorance

Unlike outright ignorance, targeted forgetting means the AI can still produce fluent text: it acts as if the erased knowledge were never there, maintaining usability.

The Importance of Contextual Fluency

ELM allows models to handle erased concepts with finesse. They don’t just go blank — they respond in a way that feels natural, shifting topics instead of crashing.

Making AI Forget and Stay Forgotten

Erasing knowledge is one thing, but making AI resistant to adversarial attacks ensures the forgotten knowledge can’t be forced out — turning ELM into an eraser that sticks.

Selective Memory for a Safer Future

The potential for concept erasure extends beyond bioweapons, cybersecurity, and literary knowledge. Imagine an AI that can forget biased associations or deliberately dangerous capabilities. With ELM, we’re getting closer to AIs that know just enough to be helpful but are wired not to know too much in areas we collectively decide are better left forgotten. This selective memory is more than a tool; it’s a promise for a safer, ethically conscious era of artificial intelligence.
