Imagine a world where a camera doesn’t just take photos but transforms those snapshots into intricate 3D models of entire crop fields. These aren’t just aesthetic replicas; they’re biologically accurate, revealing insights hidden beneath dense canopies. Researchers at the University of Illinois Urbana-Champaign and the University of Minnesota Twin Cities have developed a breakthrough in agricultural technology that does precisely this. Their method combines neural radiance fields (NeRF) and inverse procedural modeling to craft what might be considered digital twins of plants.

This isn’t just about solving one problem in agriculture; it’s about tackling a cascade of interrelated challenges, from optimizing yields and managing resources to monitoring carbon capture at scale. Current methods often fall short when dealing with occluded or dense crop arrangements, leaving gaps in the data. The new approach fills those gaps by reconstructing the hidden parts of the canopy instead of leaving them unmeasured. The implications reach beyond farming to fields as diverse as robotics, environmental science, and even genetics.

The Science of Digital Plant Twins

Creating these digital twins involves two major steps. First, NeRF processes the visible parts of plants, estimating depth and geometry from the input images, much like a sculptor visualizing the final form of a marble block. Then, in the inverse procedural modeling step, the system uses Bayesian optimization to predict and reconstruct the parts of plants that are hidden or occluded, so that the final model is both complete and biologically plausible.
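To make the second step more concrete, here is a minimal sketch of the fit-to-what-you-can-see idea. Everything in it is an assumption made for illustration: the two-parameter dome "plant", the names `render_depth`, `masked_error`, and `fit_plant`, and the synthetic depth row are all hypothetical, and a plain grid search stands in for the Bayesian optimization the researchers use. The sketch searches for model parameters whose rendered depth matches the visible pixels, then lets the fitted model supply the occluded ones.

```python
import math

N_PIXELS = 40      # pixels in one image row
CAMERA_Z = 3.0     # assumed camera height above the ground, in metres

def render_depth(height, half_width):
    """Toy procedural plant: a dome-shaped canopy seen from directly above.

    Returns the camera-to-surface depth for every pixel in a 1D row."""
    depths = []
    for i in range(N_PIXELS):
        x = -1.0 + 2.0 * i / (N_PIXELS - 1)   # ground position in [-1, 1] m
        inside = 1.0 - (x / half_width) ** 2
        surface = height * math.sqrt(inside) if inside > 0.0 else 0.0
        depths.append(CAMERA_Z - surface)
    return depths

def masked_error(params, observed, visible):
    """Mean squared depth error, counted over the visible pixels only."""
    predicted = render_depth(*params)
    errors = [(p - o) ** 2
              for p, o, v in zip(predicted, observed, visible) if v]
    return sum(errors) / len(errors)

def fit_plant(observed, visible):
    """Grid search over both parameters (a stand-in for Bayesian optimization)."""
    heights = [0.2 + 0.05 * k for k in range(47)]       # 0.20 to 2.50 m
    half_widths = [0.2 + 0.05 * k for k in range(17)]   # 0.20 to 1.00 m
    candidates = [(h, w) for h in heights for w in half_widths]
    return min(candidates, key=lambda c: masked_error(c, observed, visible))

# Synthetic "true" plant; pretend only the left half of the row is visible.
true_params = (1.2, 0.7)
true_depth = render_depth(*true_params)
visible = [i < N_PIXELS // 2 for i in range(N_PIXELS)]

# Fit on visible pixels, then render the full row, occluded pixels included.
best_params = fit_plant(true_depth, visible)
completed_depth = render_depth(*best_params)
hidden_error = max(abs(c - t)
                   for c, t, v in zip(completed_depth, true_depth, visible)
                   if not v)
print(best_params, hidden_error)
```

The synthetic plant's parameters happen to sit on the search grid, so the recovery here is essentially exact. Real depth maps are noisy and real plant models have many more parameters, which is where a sample-efficient search such as Bayesian optimization earns its keep.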

Unlike traditional techniques, which often require expensive, labor-intensive data collection, this approach relies on nothing more than standard images taken from drones or handheld devices. Whether working with the intricate trifoliate structures of soybeans or the tall stalks and long, arching leaves of maize, the system adapts. The resulting 3D meshes are not just visually accurate; they’re simulation-ready, capable of modeling essential processes like photosynthesis. This is precision agriculture for the 21st century: smart, efficient, and accessible.

Why This Technology Stands Apart

Here’s what makes this innovation so groundbreaking:

- Detail Without Compromise: Traditional methods often fail to capture occluded or intricate regions, but this system reconstructs even the invisible.

- Minimal Input, Maximum Output: A few simple images, captured by drones or phones, replace the need for costly 3D ground-truth datasets.

- Universal Application: The system works for a variety of crops, from tightly packed soybean fields to widely spaced rows of maize.

- Plug-and-Play Integration: The outputs feed seamlessly into radiative transfer and productivity models, offering actionable insights.

- Environmental Game Changer: With large-scale, image-based crop monitoring, this method democratizes data critical for tackling global challenges like climate change and food security.
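As a rough illustration of the plug-and-play point in the list above, the sketch below shows one way a reconstructed mesh could feed a very simple light-interception estimate. It is not the radiative transfer model the researchers use. It assumes a hypothetical mesh given as a list of triangles, computes a leaf area index (leaf area per unit ground area), and applies the textbook Beer-Lambert approximation with an illustrative extinction coefficient `k = 0.5`. The function names are made up for this example.

```python
import math

def triangle_area(a, b, c):
    """Area of a 3D triangle: half the length of the cross product of two edges."""
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def leaf_area_index(triangles, ground_area):
    """One-sided leaf area divided by the ground area beneath the canopy."""
    return sum(triangle_area(*t) for t in triangles) / ground_area

def intercepted_fraction(lai, k=0.5):
    """Beer-Lambert estimate of the fraction of incoming light the canopy intercepts.

    k is an assumed extinction coefficient, chosen here only for illustration."""
    return 1.0 - math.exp(-k * lai)

# Hypothetical mesh: two triangles forming a 1 m x 1 m leaf over 0.5 m^2 of ground.
leaf_mesh = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)),
    ((0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)),
]
lai = leaf_area_index(leaf_mesh, ground_area=0.5)
fraction = intercepted_fraction(lai)
print(lai, fraction)
```

A full radiative transfer model tracks light through the actual leaf geometry and orientation, which is exactly why complete, occlusion-free meshes matter. The single-number summary here only hints at that pipeline.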

A Paradigm Shift in Agricultural Monitoring

The potential applications of this technology are staggering. Farmers can monitor their fields in real time, detecting stress, optimizing irrigation, and tailoring nutrient applications with unprecedented precision. Researchers can use the data to model plant growth and genetic resilience, paving the way for hardier crop varieties. Policymakers can rely on its carbon exchange estimates to guide decisions about climate change mitigation.

What’s particularly exciting is how accessible this technology is. Farmers don’t need specialized sensors or expensive tools — just a camera-equipped drone or even a smartphone. This levels the playing field, allowing small-scale farmers to adopt practices that were previously accessible only to well-funded industrial operations.

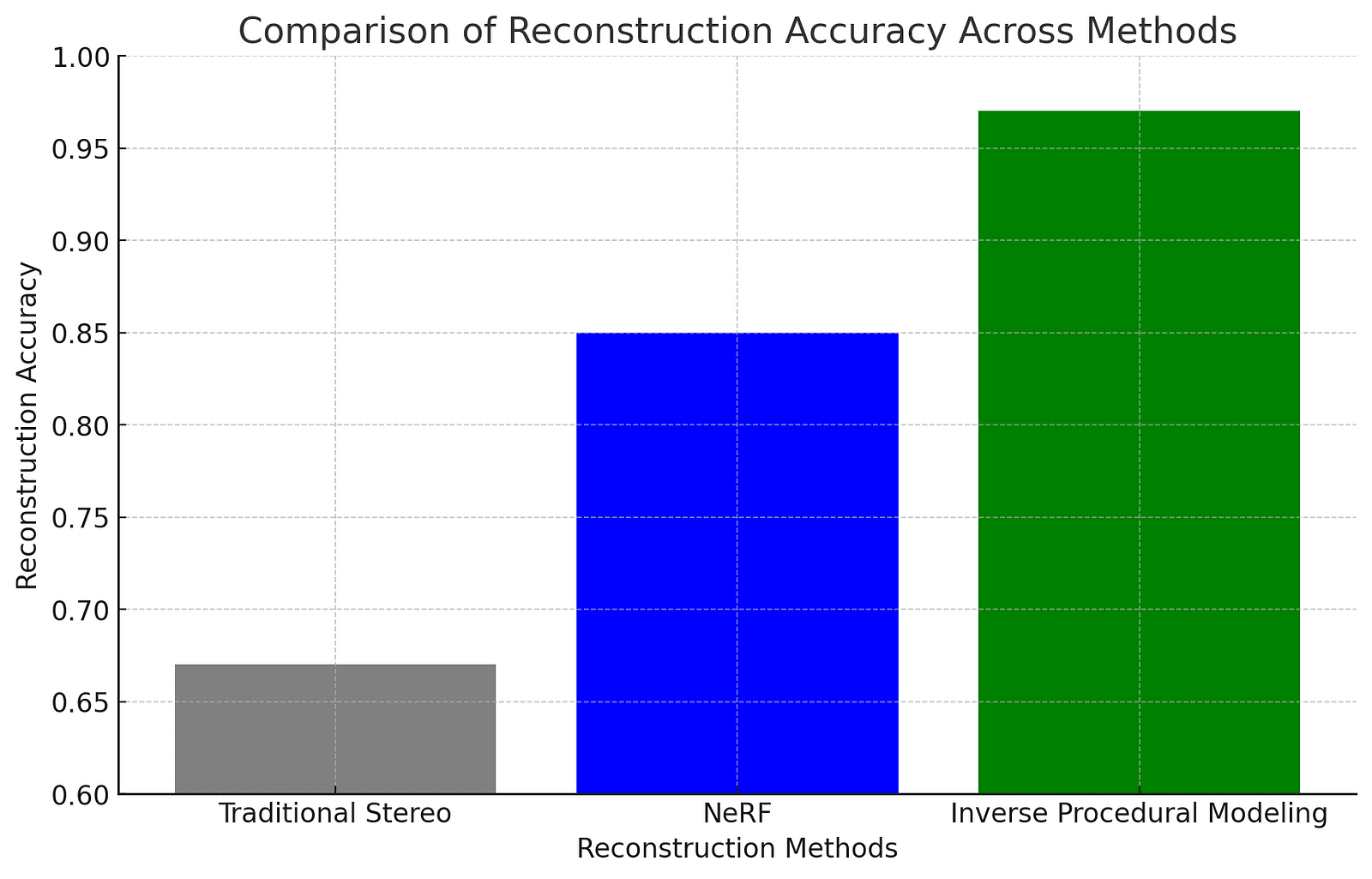

To understand just how revolutionary this method is, we can compare reconstruction accuracy across different technologies. (The accompanying graph illustrates the improvement offered by this system.)

Five Layers of Innovation: Why This Matters

Breaking down the revolutionary aspects of this method reveals layers of ingenuity and impact:

- Detail is King: Unlike traditional methods, this system reconstructs occluded regions, creating full, biologically plausible 3D canopies.

- Efficiency Without Compromise: Simple drone or phone-captured images are all that’s required.

- Crop Diversity in Focus: Whether it’s the sprawling complexity of soybeans or the structured elegance of maize, the system adapts with ease.

- Insights Beyond the Field: The 3D outputs are ready for simulations that predict productivity, optimize growth, and estimate photosynthesis rates.

- Scaling Climate Action: From field-level carbon monitoring to global policy decisions, this innovation democratizes critical environmental data.

A Glimpse Into the Future of Agriculture

This isn’t just technology — it’s a glimpse into a future where precision agriculture is accessible to everyone. With large-scale implementation, farmers will manage their resources with unparalleled accuracy, reducing waste and increasing yields. The environmental implications are equally profound: scalable carbon monitoring means that policymakers can measure and mitigate the agricultural sector’s carbon footprint with confidence.

The beauty of this technology lies in its simplicity. Farmers, scientists, and policymakers can all benefit, and its implementation requires little more than existing imaging devices and cloud-based software. As climate challenges grow more urgent, solutions like this offer a hopeful path forward. In this future, every field becomes a data-rich ecosystem, and every plant contributes to a deeper understanding of our world.
