In the fast-moving world of technology, speech-driven 3D facial animation has become a focal point, especially in virtual reality, video games, and video conferencing. Imagine a virtual world where characters not only speak but also express emotion the way real people do. That world is moving closer, thanks to studies such as “DualTalker: A Cross-Modal Dual Learning Approach for Speech-Driven 3D Facial Animation.”

The Challenge of Realistic Facial Expressions

Creating realistic facial expressions for 3D characters is incredibly challenging. When we talk, our faces convey emotion through subtle movements: a slight twitch of the lips, a gentle raise of the eyebrows, a quick glance. Capturing these fine details in animation has been a significant hurdle. Most existing techniques treat the problem as a single task, a one-way regression from speech to facial motion that focuses mainly on matching lip movements to the audio. That approach often misses the subtler dynamics of human expression.

DualTalker: A Revolutionary Framework

Enter DualTalker, a framework developed by researchers Guinan Su, Yanwu Yang, and Zhifeng Li. Rather than treating animation as a one-way mapping from audio to face, DualTalker introduces a cross-modal dual-learning framework. The goal is twofold: to make better use of limited training data, and to model the relationship between speech and facial motion more explicitly.

How Does DualTalker Work?

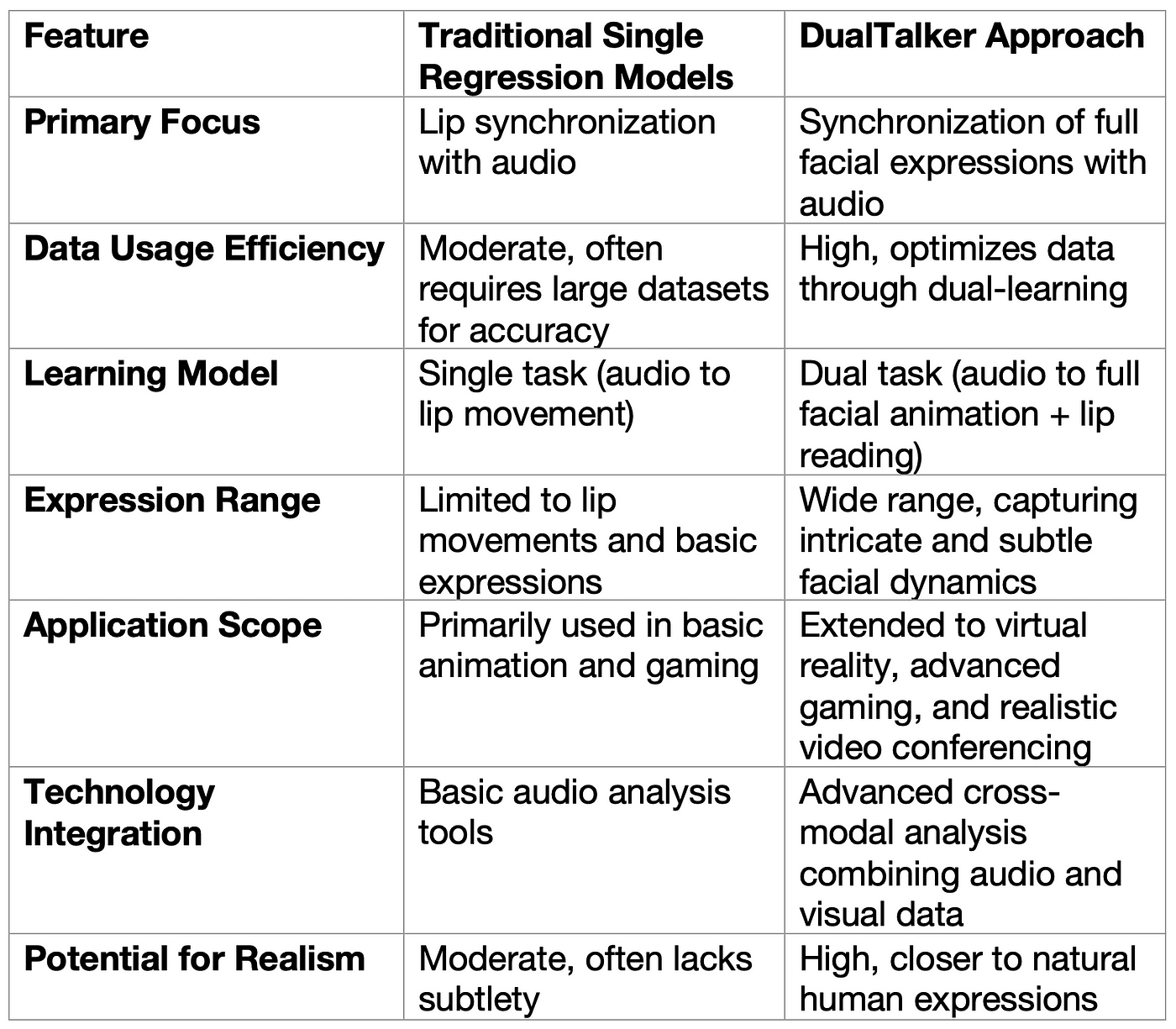

To understand DualTalker, consider the two tasks it learns together: audio-driven facial animation and lip reading. The primary task animates a 3D face from audio cues such as spoken words. The dual task, lip reading, goes the other way: it recovers what was said just by observing lip movements. Because the two tasks are trained jointly and share audio and motion encoder components, every pair of speech and facial motion in the training data teaches the model in both directions. This lets DualTalker capture a more comprehensive and nuanced range of facial expressions than traditional single-task models. Table 1 compares the two approaches.

Table 1. Comparing Traditional 3D Facial Animation with the DualTalker Approach
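To make the joint-training idea concrete, here is a deliberately tiny Python sketch. It is a toy built on stated assumptions, not DualTalker's architecture or code. "Audio" and "motion" are single numbers. The shared encoder component is one hypothetical scalar projection `s`. Two hypothetical heads, `p` (audio to motion) and `q` (motion back to audio), stand in for the animation and lip-reading tasks. The function name `train_dual` and the loss weight `lam` are also illustrative. Each training pair contributes a loss in both directions, and the shared projection is updated by both.

```python
# Toy sketch of dual-task (joint) training with one shared component.
# Hypothetical illustration only, not DualTalker's actual architecture:
# scalar "audio"/"motion" features, a shared linear projection `s`,
# a primary head `p` (audio -> motion) and a dual head `q` (motion -> audio).

def train_dual(pairs, lam=1.0, lr=0.05, steps=2000):
    """Minimise mean primary error + lam * mean dual error by gradient descent."""
    s, p, q = 0.5, 0.5, 0.5  # shared projection, primary head, dual head
    n = len(pairs)
    for _ in range(steps):
        gs = gp = gq = 0.0
        for a, m in pairs:
            # Primary task: animate from speech, m_hat = p * (s * a).
            r1 = p * s * a - m
            gp += 2 * r1 * s * a / n
            gs += 2 * r1 * p * a / n
            # Dual task (stand-in for lip reading): recover audio from motion,
            # a_hat = q * (s * m), reusing the same shared projection `s`.
            r2 = q * s * m - a
            gq += lam * 2 * r2 * s * m / n
            gs += lam * 2 * r2 * q * m / n
        # The shared projection receives gradient from BOTH tasks.
        s -= lr * gs
        p -= lr * gp
        q -= lr * gq
    return s, p, q


# Hypothetical paired data in which motion is exactly twice the audio feature.
pairs = [(a, 2.0 * a) for a in (-1.0, -0.5, 0.5, 1.0)]
s, p, q = train_dual(pairs)
print(round(p * s * 0.3, 3))  # motion predicted from audio 0.3 -> 0.6
print(round(q * s * 0.6, 3))  # audio recovered from motion 0.6 -> 0.3
```

Setting `lam=0.0` switches off the dual task and turns the sketch back into the single-task regression described earlier: the dual head is never updated, and the shared projection learns only from the animation loss. How strongly the two losses are weighted against each other is an assumed hyperparameter in this sketch.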

The Impact of DualTalker in Various Fields

The implications of DualTalker are broad. In video games, it could create more lifelike and emotionally resonant characters. In virtual reality, it could add realism, making experiences more immersive. For video conferencing, imagine 3D avatars that replace our video feeds and, driven by our voices, mirror our expressions in real time, bringing a new level of engagement.

Future Possibilities and Challenges

The development of DualTalker opens the door to many possibilities, but the road ahead has its challenges. One of the biggest is ensuring that the system accurately captures the diversity of human expression across cultures and languages, especially since today's 3D audio-visual datasets are small. As the technology matures, ethical questions, particularly around privacy and the potential for misuse, will also need to be addressed.

Conclusion: A Step Towards a More Expressive Digital World

DualTalker marks a significant step in 3D facial animation, bringing us closer to a future where digital faces mirror the intricacies of human expression. As the technology evolves, it promises to make our digital interactions more expressive and lifelike. The journey of DualTalker is just beginning, and its potential impact on our digital lives is exciting.

About Disruptive Concepts

Welcome to @Disruptive Concepts — your crystal ball into the future of technology. 🚀 Subscribe for new insight videos every Saturday!